Introduction

As a trainer and assessor, I have been delivering the Certificate IV in Training and Assessment qualification since it was released in 2004. Over the past two decades I have seen many changes. A new phenomenon has recently appeared.

Over the past two years the answers to knowledge questions that are submitted for assessment have significantly improved. Two years ago, I would have seen many poorly written answers with spelling and grammatical errors. Last year, there was a noticeable improvement with far fewer spelling and grammatical errors. This year, most answers to knowledge questions are very well written.

Usually, at least half of the participants attending my Certificate IV in Training and Assessment courses have English as their second language. And I have come to expect spelling and grammatical errors. But things have changed. Miraculously, I am now assessing written answers to questions that seem to be too good to believe.

Also, I am seeing many more people spelling words using American English rather than Australian English. I am seeing the letter ‘z’ far too often.

What has happened?

Over the past two years there has been a substantial uptake in people using AI. Like many people, I too use AI often. And like many people, I find it to be useful.

As a trainer, I tell my participants that AI may be useful. However, I ask them not to use AI for answering their knowledge questions. I tell them that there are five ways I can tell if a response has been generated by AI:

- Consistency: AI responses are often highly consistent in tone, style, and factual accuracy, making them seem almost too perfect.

- Pattern Recognition: Look for repetitive phrases, unnatural sentence structures, or overreliance on certain keywords.

- American English Bias: AI may favor American English, using “z” instead of “s” in words like “analyze” or “realize.”

- Numbered Lists: AI often generates numbered lists, even when they are not explicitly requested.

- Key Phrase Followed by Colon: Pay attention to responses that frequently use a key phrase followed by a colon, followed by additional information. This is a common pattern in AI-generated text.

By the way, I used AI to generate the above list.

People are using AI

I am assuming that many participants studying for a vocational education and training (VET) qualification are using AI. And I will assume that the number of participants using AI will grow. It is likely that some participants will be tempted to use AI to help them answer their knowledge questions.

Some participants make it easy to identify when an answer has been generated by AI. I see answers with the following characteristics:

- Key Phrase Followed by Colon: Responses that have used a key phrase followed by a colon, followed by additional information.

- Over-capitalisation (using too many capital letters)

- The letter ‘z’.
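For anyone who wants to screen submissions for these three characteristics before reading them closely, the checks could be roughly sketched in Python as below. This is only an illustration and not a tool I use: the function name `flag_answer`, the short word list and the thresholds are all assumptions made up for the example. A flag is a prompt to have a conversation with the participant, not proof that AI was used.

```python
import re

# Hypothetical, deliberately short word list (an assumption for this sketch).
# A real check would need a much fuller list of American spellings.
AMERICAN_SPELLINGS = {"analyze", "realize", "organize", "recognize", "favor", "color"}

# A line that starts with a short phrase (capitalised first word, up to five
# words in total), then a colon, then more text. An optional bullet is allowed.
KEY_PHRASE_COLON = re.compile(
    r"^[ \t]*(?:[-*•][ \t]*)?[A-Z][\w'-]*(?: [\w'-]+){0,4}:[ \t]+\S",
    re.MULTILINE,
)


def flag_answer(text):
    """Return the names of the surface characteristics found in an answer."""
    flags = []
    words = re.findall(r"[A-Za-z]+", text)

    # The letter 'z': words that appear in the American spelling list.
    if any(word.lower() in AMERICAN_SPELLINGS for word in words):
        flags.append("american_spelling")

    # Key phrase followed by colon, used on two or more lines (assumed threshold).
    if len(KEY_PHRASE_COLON.findall(text)) >= 2:
        flags.append("key_phrase_colon")

    # Over-capitalisation: more than 30% of words start with a capital letter
    # (assumed threshold; very short answers are skipped).
    if len(words) >= 5:
        capitalised = sum(1 for word in words if word[0].isupper())
        if capitalised / len(words) > 0.3:
            flags.append("over_capitalisation")

    return flags


suspect = (
    "Risk Assessment: We analyze the Hazard.\n"
    "Control Measures: We organize the Workplace."
)
plain = "I checked the hazard and then organised the workplace."

print(flag_answer(suspect))
print(flag_answer(plain))
```

Checks like these only look at the surface of the text. A participant who was educated in American English, or who writes in a heavily formatted style, would trigger the same flags, so the result still needs an assessor's judgement.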

Grammarly is AI

Recently, I asked one of my participants if they were using AI to answer the knowledge questions. They told me that they were not. As I showed the participant why I had asked my question, they told me that they use Grammarly. Luckily, I knew that Grammarly is AI, so I was able to inform them that the application was likely to be doing more than just correcting spelling and grammatical errors. The participant agreed and said that they would immediately remove the application.

The following is a snippet from the Grammarly homepage.

Grammarly will write text, not just correct spelling and grammar. The same thing is likely to be happening for people with English as their second language when they are using translation apps. I’m not sure, but if you know, I would be happy to hear from you.

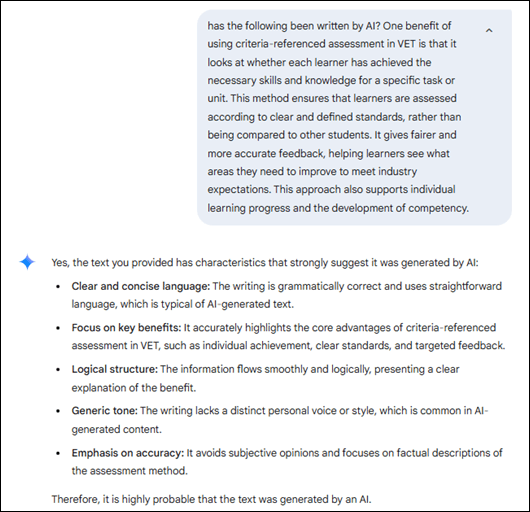

Using AI to investigate the use of AI

Many answers to knowledge questions are looking too perfect to have been written by a human. But how do I know if an answer has been generated by AI? I gave AI an answer submitted by one of my participants and asked if it had been written by AI. Here is AI’s response.

AI tells me that it is highly probable that the text was generated by AI.

This backs up my hunch that the participant’s answer to the knowledge question was likely to have been generated by AI. And I have a hunch that many participants are using AI to write answers to their knowledge questions.

AI is getting better

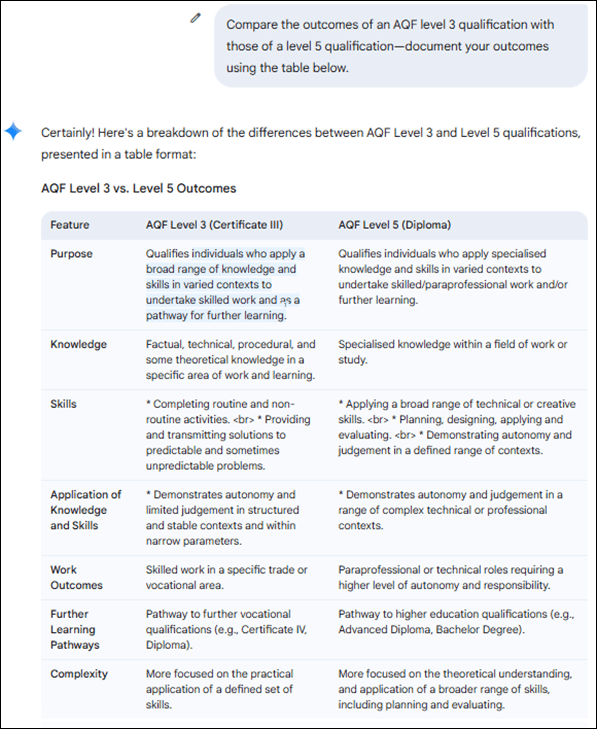

Two years ago, even a year ago, I would have been getting many more incorrect answers from AI. It is continuously getting better, and because it is connected to the internet, the AI-generated responses can be astonishingly accurate. Here are two examples where I asked AI to answer a knowledge question.

Example 1

I did not provide the table. AI generated it.

Example 2

There was no need to go to the website. AI provided the link.

AI can give wrong answers

Although AI is getting better, it can still give incorrect answers.

Here is an answer to a knowledge question submitted by a participant.

The correct answer that I’m looking for is, ‘JSA stands for Jobs and Skills Australia’.

I asked AI, ‘what does JSA stand for’, and the following is what I got.

This tells me that the participant probably got their answer from AI. As an assessor, it is good that AI is still providing some incorrect answers.

In conclusion

Participants studying for VET qualifications are using AI. On one hand we are encouraging our participants to use AI to help them perform their work. But on the other hand, we tell our participants not to use AI to answer the knowledge questions.

Regardless of what we say, some participants are using AI to answer their knowledge questions. Their answers may have the following characteristics:

- Answers that are very well written without spelling and grammatical errors

- Answers that are in a format that looks AI-generated

- Answers with the letter ‘z’

- Answers that are obviously incorrect.

I believe that many participants will use AI. And I believe that many participants will not use AI as a tool to help them learn something. Instead, they will use it only to answer questions blindly, with no thinking involved.

Using AI is not learning.

It would be good to hear what you think about this topic.

Please contact me, Alan Maguire, on 0493 065 396 if you need to learn how to legitimately use AI as a trainer and assessor, or how to legitimately use AI if you are studying for your TAE40122 Certificate IV in Training and Assessment qualification.

Do you need help with your TAE studies?

Are you doing the TAE40122 Certificate IV in Training and Assessment, and are you struggling with your studies? Do you want help with your TAE studies?

Ring Alan Maguire on 0493 065 396 to discuss.

Training trainers since 1986

This is so on track, Alan. A good read.

I am very concerned about learners who shortcut the learning process by turning to AI instead of what they remember from reading the supplied resources.

Poorly designed knowledge questions foster this.

Before AI we were able to detect copied work from the internet that wasn’t referenced. Now AI will reference for us too.

Know your students, know their grammar and spelling capabilities and language style. Get to know this before the first assessment submission. When in doubt check it out.

Verbal questioning, and a lack of informed input into class discussions, help me detect the misguided learners.

I am suggesting that if we are rigorous in our expectations for students to reference their sources, we are halfway there.

The other half is achieved by teaching students how to learn to retain knowledge. Coaching them on how to learn knowledge and how to retain that knowledge.

I provide them with plenty of opportunities to use the underpinning knowledge. Without underpinning knowledge they will constantly struggle to make the right decision for or with their clients.

I teach online. I phone, email and Moodle message my students to ask clarifying questions when I suspect they are attempting to take a shortcut to competence. The flaws in their approach to their studies will show up in well-designed practical and observed assessments.

I have used AI a lot to create learning opportunities for my Community Services students, e.g. themed case studies and ethical dilemmas, and I am grateful for this.

No one should rely on AI. That is a trap for the naïve.


I imagine that to overcome the issue with AI responses to the knowledge questions, rather than written responses, we might end up heading to verbal face-to-face questioning, which has many issues of its own!
