As part of the TAE Training Package review, a new e-learning unit has been drafted. The title of the proposed unit is TAEDEL405 Plan, organise and facilitate e-learning.

PwC’s Skills for Australia is currently seeking our feedback about this draft. The following is my feedback about the unit, and I would be happy to hear your comments before I submit it.

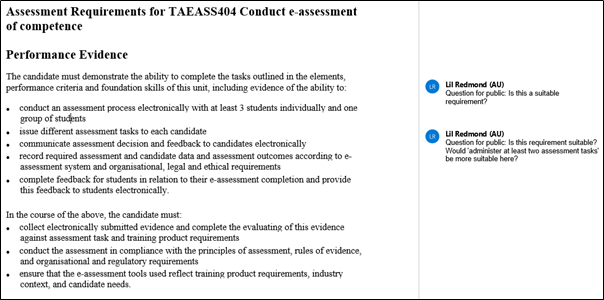

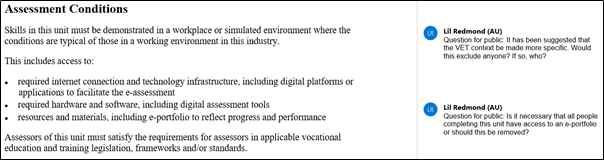

(You may also like to read an article that I have previously posted about another proposed unit with the title of TAEASS404 Conduct e-assessment of competence.)

Types of e-learning

There are different types of e-learning. E-learning is often described as being either synchronous or asynchronous. The following table broadly describes these two types of e-learning.

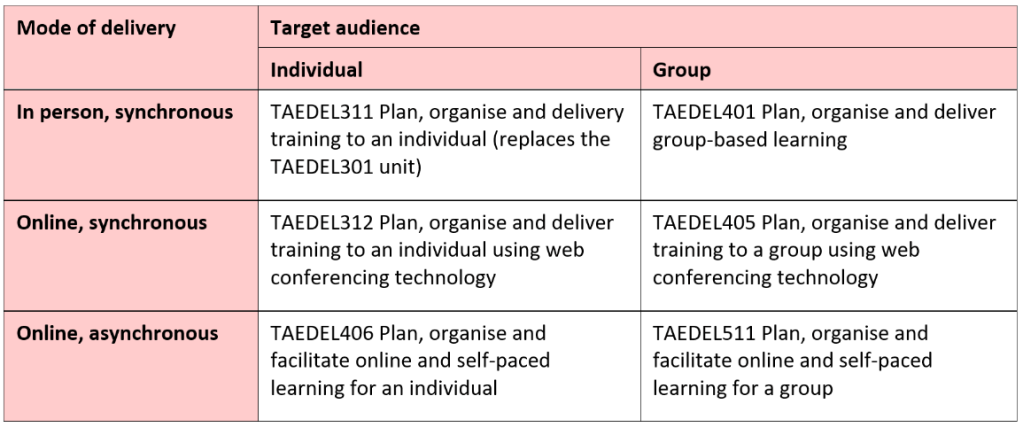

The knowledge and skills required for facilitating self-paced e-learning (asynchronous) are different to those required for facilitating group-based e-learning (synchronous). Also, the tasks performed are different. I think it would be best to cover these two types of e-learning in two different units of competency.

Is there a need for a new e-learning unit of competency?

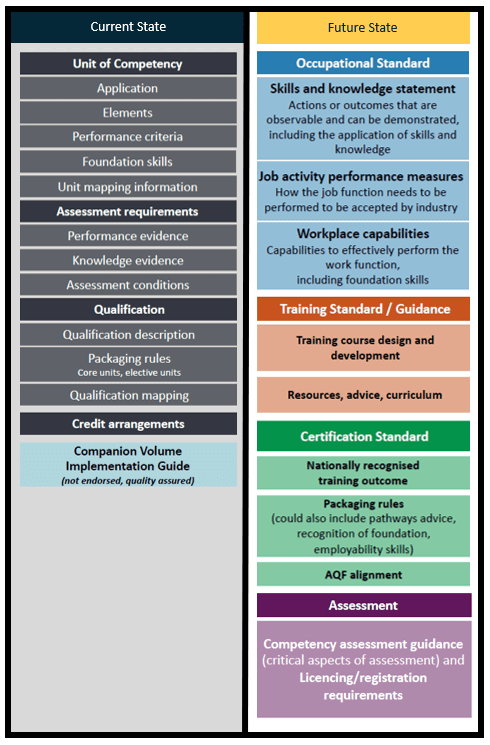

Synchronous and asynchronous e-learning are different tasks, and each type of e-learning requires different knowledge and skills. I recommend having two units instead of one: one unit covering synchronous e-learning, and another covering asynchronous e-learning. Also, delivering synchronous e-learning to an individual is different to delivering synchronous e-learning to a group. Delivery to a group requires a higher level of skill than delivery to an individual.

The following is my recommendation. The unit codes and titles should be read as indicative for the purpose of this example.

Note: Learning to use web conferencing technology is relatively quick and easy.

TAEDEL405 Plan, organise and facilitate e-learning (draft)

Is the TAEDEL501 Facilitate e-learning unit of competency being down-graded to become the TAEDEL405 Plan, organise and facilitate e-learning unit of competency?

The TAEDEL405 Plan, organise and facilitate e-learning unit of competency seems to be a rewrite of the current TAEDEL501 Facilitate e-learning unit of competency. Therefore, it appears that the TAEDEL501 unit is being down-graded to become the TAEDEL405 unit. However, this is my assumption. Down-grading or lowering the skill level of the TAEDEL501 unit seems unnecessary and a waste of time and effort.

Feedback about the elements and performance criteria

The following table provides a summary of how the draft TAEDEL405 unit differs from the current TAEDEL501 unit.

Note: The changes to the TAEDEL501 unit are trivial or superficial.

Feedback about the foundation skills

A candidate will be required to demonstrate the ability to complete the tasks outlined in the elements, performance criteria and foundation skills (as per the Performance Evidence statement). This makes the foundation skills assessable.

Analysis and comprehensive feedback about the foundation skills would require much more time and effort. However, I believe that a critical analysis of the foundation skills should be done prior to implementation.

Feedback about the performance evidence

The following are the performance evidence.

I have many questions about the performance evidence:

- What is one complete program of learning? What is a session? And what program of learning has only three sessions?

- What is a synchronous e-learning session? Is it a training session delivered via a web conferencing platform?

- Is a group of four students large enough? How many students are normally in a group attending a synchronous e-learning session? Who decides on the size of the group? From my experience, at least eight students would be a more realistic number.

- Why are learners being referred to as students? Will this prohibit the unit from being used for community-based training providers, enterprise-based training providers and non-VET training providers who do not have ‘students’?

- What is an asynchronous e-learning session? The concept of sessions for asynchronous e-learning seems odd.

Currently, the TAELLN411 unit adequately covers identifying and addressing LLN (and D) needs. This does not have to be integrated into another unit of competency.

I think the scope of the draft TAEDEL405 Plan, organise and facilitate e-learning unit of competency should be split over two or more units. For example, one unit covering the delivery of synchronous learning, and another unit covering the delivery of asynchronous learning. One unit of competency to address the range of e-learning types is too ambitious.

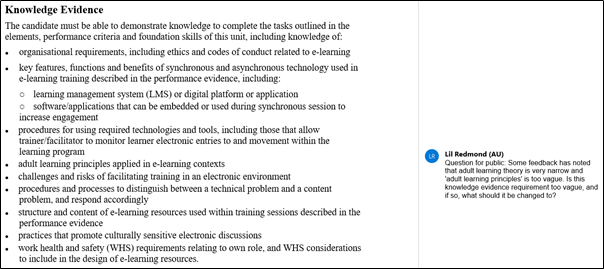

Feedback about the knowledge evidence

The following are the knowledge evidence.

Analysis and comprehensive feedback about the knowledge evidence would require much more time and effort. However, I believe that a critical analysis of the knowledge evidence should be done prior to implementation.

The breadth and depth of knowledge required for this unit seem to be at a higher AQF level. I would also like to know, ‘what is the source document for the code of conduct related to e-learning?’

Feedback about the assessment conditions

The following are the assessment conditions.

I am unsure what is meant by an ‘e-portfolio to reflect progress and collect evidence’. I hope the term ‘e-portfolio’ is removed.

In conclusion

Is there a need for a new e-learning unit of competency?

The draft TAEDEL405 Plan, organise and facilitate e-learning unit of competency duplicates the current TAEDEL501 Facilitate e-learning unit of competency. Therefore, there is no need for this new e-learning unit. The draft TAEDEL405 Plan, organise and facilitate e-learning unit of competency should be scrapped.

Instead of the proposed one new e-learning unit of competency, I would suggest looking at several new e-learning units of competency. For example:

- Plan, organise and deliver training to an individual using web conferencing technology

- Plan, organise and deliver training to a group using web conferencing technology

- Plan, organise and facilitate online and self-paced learning for an individual

- Plan, organise and facilitate online and self-paced learning for a group

If the TAEDEL405 unit is implemented, will the TAEDEL501 unit be removed from the TAE Training Package? Is the aim to downgrade the skill level of the current TAEDEL501 unit? (So many unanswered questions.)

Please let me know what you think.