The current Australian VET system was implemented in 1992. Since then, there have been many changes. One of those changes is how units of competency have been documented. The initial units of competency looked very different to today’s units of competency.

The first training packages in the Australian VET system were endorsed in 1997. This began the standardisation of units of competency across the different industry sectors. It should be noted that ‘standardisation’ has never resulted in all units of competency looking the same. There have always been some variations.

In 2012, a ‘new’ format for units of competency was introduced. The Standards of Training Packages specified this new format.

The changes specified by the Standards of Training Packages included:

- Inclusion of Foundation Skills

- Separation of the Unit of Competency and the Assessment Requirements into separate documents.

It took ten years before all units of competency complied with the 2012 Standards of Training Packages.

And this year, from 1 July 2025, there will be another ‘new’ format for units of competency. To add to the complexity of the Australian VET system, there will be two new formats rather than one. One of the new formats is very similar to the current format, but the other looks more like a description of curriculum than a description of competency. This turns back the clock to before 1992, when competency-based training and assessment was introduced to replace the failing curriculum-based system.

These changes are specified by the Training Package Organising Framework.

An example: changing units of competency

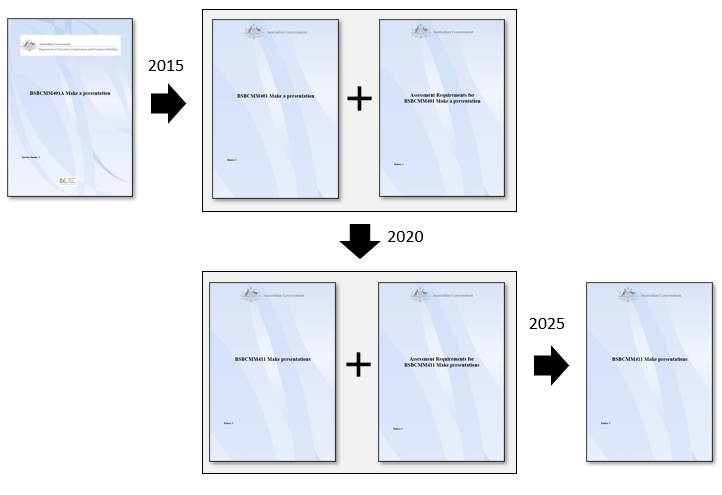

The ability to make presentations has not significantly changed over the past decade. However, the relevant unit of competency was updated in 2015, and updated again in 2020. Why did we need to change from the 2015 BSBCMM401 Make a presentation unit to the 2020 BSBCMM411 Make presentations unit? Let’s quickly compare these two units.

The first difference is that the BSBCMM401 Make a presentation unit is singular, and the BSBCMM411 Make presentations unit is plural. Singular refers to making one presentation, while plural refers to making more than one presentation. It could be argued that if you can competently make a presentation, you would have the ability to make another presentation.

The second difference, which follows from the first, relates to the performance evidence. The performance evidence for the BSBCMM411 Make presentations unit specifies the delivery of two presentations, while the performance evidence for the BSBCMM401 Make a presentation unit specified the delivery of at least one presentation. This change is underwhelming.

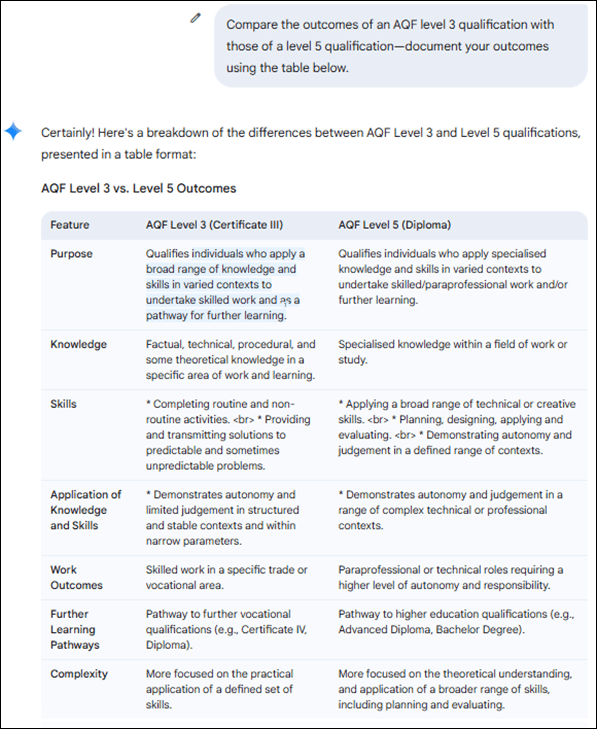

The following table compares the elements and performance criteria for the two units.

This comparison shows three differences:

- Performance criterion 1.4 of the BSBCMM401 unit has been removed

- Rewording has shortened some performance criteria and, in some cases, made them easier to read

- The number of performance criteria for Element 2 has been reduced from six to three.

The last point, about the reduced number of performance criteria, is deceptive because two of the three performance criteria that have been removed from Element 2 are covered by the Foundation Skills for the BSBCMM411 unit. Overall, the change from BSBCMM401 to BSBCMM411 made a slight improvement. It is debatable whether the change was necessary.

Déjà vu: changing units of competency

People who are new to the Australian VET system may not notice it, but many who have been around for a while may experience déjà vu with the current and future changes to units of competency. One document became two documents, and now two documents have become one document again.

Before 2012, a unit of competency was one document with two parts:

- Unit of Competency

- Evidence Guide.

From 2012, a ‘new’ format for units of competency was introduced, consisting of two documents:

- Unit of Competency

- Assessment Requirements (which replaced the Evidence Guide).

In April 2025, the training.gov.au website combined the two documents for each unit of competency into one document again. This document has two parts:

- Unit of Competency

- Assessment Requirements.

The following illustrates how the Make presentations unit has changed over the past decade, from one document to two documents, and back to one document.

This recent change to a ‘one document format’ is consistent with the format for units of competency specified by the Training Package Organising Framework.

In conclusion

It seems that much effort goes into making changes, and implementing every change costs money and consumes valuable resources. The change of units of competency from one document to two, and back to one, may be considered trivial.

But be aware: the change from competency-based training and assessment to curriculum-based training and assessment is significant, especially if the providers of training and assessment begin to determine the curriculum, rather than industry and employers determining the competencies.

We seem to be returning the Australian VET system to where it was before 1992. It wasn’t great then, and it won’t be great for our future.